AI RAG and ...everything...

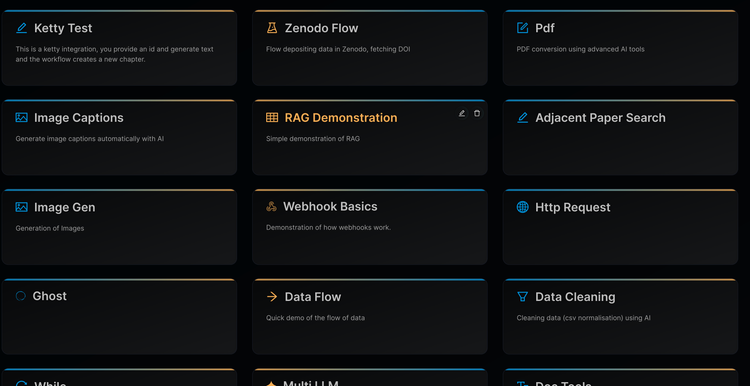

I've been keeping up with the AI whirlwind as much as I can. There is a lot to follow, but a few trends are noticeably maturing. One of the most dominant is the AI RAG (Retrieval Augmented Generation) model. Consequently, we are working on integrating this strategy into all Coko products, and the results are very encouraging.

Understanding AI RAG Integration

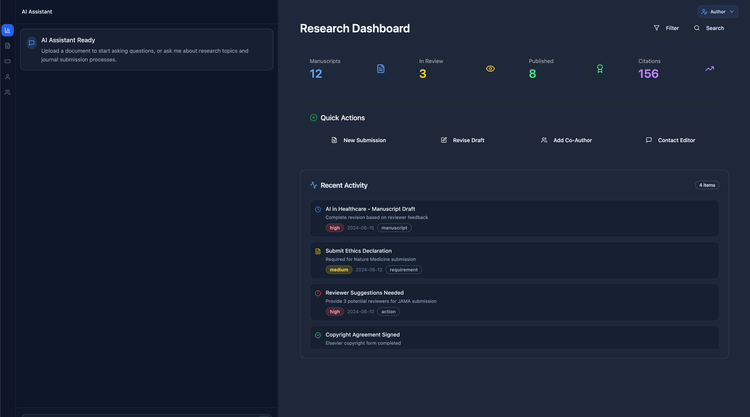

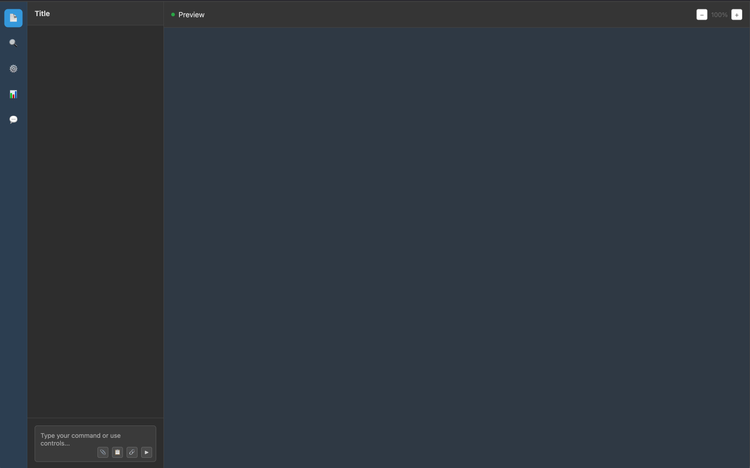

At its core, the AI RAG Integration equips an AI assistant, functioning within a production environment such as a word processor like Wax, to access and leverage documents uploaded by users into their personal or project-specific repositories. Once a document is uploaded, it becomes a part of the referenceable material that AI tools can actively consult to extract relevant information.

This functionality is particularly valuable for users involved in content creation, such as authors of books or academic papers. For instance, if a user needs a specific statistic or fact while writing, the AI assistant can retrieve it from the stored documents and incorporate it into the work in progress, improving both the efficiency and the accuracy of content generation.

Essentially, when a document is uploaded to the RAG repo, the following occurs:

- first the file is split into parts - let's call them "components", though they are often called "chunks" (there are many ways to do this; currently we are taking a very basic approach and splitting at headers).

- each 'component' is then indexed as 'vector space representations of the chunk's mathematical properties'. Which is to say, the textual components (it can also be done for images) are each turned into a list of numbers (a vector, often called an 'embedding') that can be compared mathematically.
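As a rough illustration of the first step, here is a minimal sketch of splitting at headers. It assumes the uploaded document is Markdown-style text with `#` headings; the `Chunk` class and `split_at_headers` function are hypothetical names for this example, not Coko's actual code.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    heading: str  # heading text, or "" for text before the first heading
    text: str     # body text that follows the heading


# Assumption: headings look like Markdown ATX headings ("# Title" to "###### Title").
HEADING = re.compile(r"^#{1,6}\s+(.*)$")


def split_at_headers(document: str) -> list[Chunk]:
    """Split a document into one chunk per heading."""
    chunks: list[Chunk] = []
    heading = ""
    lines: list[str] = []

    def flush() -> None:
        # Skip the empty "chunk" that exists before any content has been seen.
        if heading or any(line.strip() for line in lines):
            chunks.append(Chunk(heading, "\n".join(lines).strip()))

    for line in document.splitlines():
        match = HEADING.match(line)
        if match:
            flush()  # close the previous chunk before starting a new one
            heading = match.group(1).strip()
            lines = []
        else:
            lines.append(line)
    flush()  # close the final chunk
    return chunks
```

Splitting at headers keeps each chunk on a single topic, which is why it is a reasonable first approach. Other strategies (fixed-size windows, paragraphs, sentences) trade off differently.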

When a user creates a prompt, we turn that into the same kind of numerical representation and match it against the indexed components in the repo. We then take the plain text of the closest-matching components and send it to the LLM together with the original prompt (also in plain text). The LLM processes this and sends back a response.
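The matching step can be sketched roughly as follows. This is a toy under stated assumptions: a real setup would call an embedding model to produce the vectors, whereas here `embed` is a stand-in that simply counts words. The function names and the prompt layout are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: word counts as a sparse vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(prompt: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks whose vectors are closest to the prompt's vector."""
    query = embed(prompt)
    ranked = sorted(chunks, key=lambda c: cosine(query, embed(c)), reverse=True)
    return ranked[:k]


def build_llm_input(prompt: str, chunks: list[str], k: int = 2) -> str:
    """Combine the retrieved plain-text chunks with the original prompt."""
    context = "\n\n".join(retrieve(prompt, chunks, k))
    return f"Context:\n{context}\n\nQuestion: {prompt}"
```

The string returned by `build_llm_input` is what would be sent to the LLM. In practice the chunk vectors would be computed once at upload time and stored, rather than recomputed for every prompt as this sketch does.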

Simple really...

We will also add tools for determining how to split a document, as this can greatly affect the results. We will also expose the 'chunking' to the user in 'advanced modes' so users can see whether the chunking really makes sense, learn how RAG works, and understand how their setup is generating the results they see. Transparency in all this is essential for us all to learn and become more proficient as these new approaches develop.

The Importance of Open Source and Flexibility

Initially, to develop this feature, we are using OpenAI's API, as it is an easy-to-use pathway for prototyping and production code. However, OpenAI is famously not open, and we are committed to maintaining an open-source approach. Consequently, we are also building in an abstraction that allows any LLM to be targeted. Although 'real' open source LLMs are few and far between, we give users the choice of which technologies they wish to integrate with (which could also mean using enterprise LLMs within an organization's own tech environment).
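One way such an abstraction could look is sketched below. This is an assumption about the general shape, not the actual Coko implementation: `LLMBackend`, `EchoBackend`, and `AIAssistant` are hypothetical names, and the placeholder backend makes no network calls.

```python
from typing import Protocol


class LLMBackend(Protocol):
    """Anything that can turn a prompt into a response can be a backend."""

    def complete(self, prompt: str) -> str: ...


class EchoBackend:
    """Placeholder backend for local testing; a real one would call an LLM."""

    def complete(self, prompt: str) -> str:
        return f"[echo] received {len(prompt)} characters"


class AIAssistant:
    """Assistant code depends only on the LLMBackend interface."""

    def __init__(self, backend: LLMBackend) -> None:
        self.backend = backend

    def ask(self, prompt: str, context: list[str]) -> str:
        # Retrieved plain-text chunks are sent together with the prompt.
        full_prompt = "\n\n".join([*context, prompt])
        return self.backend.complete(full_prompt)


assistant = AIAssistant(EchoBackend())
reply = assistant.ask("Summarise chapter two.", ["(retrieved chunk text)"])
```

Swapping OpenAI for a self-hosted or enterprise model would then mean writing one new class with a `complete` method, leaving the assistant itself unchanged.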

Also, since we are integrating this functionality with Ketty (see below) via Wax (see above!) using the existing AI Assistant, we can then bring this process easily into any Coko product (all Coko products use Wax).

Open Source at its best. Reusable, transparent, free.

Future Directions and Collaborations

Looking ahead, I'm excited about the potential of this technology to aid academic and research communities. We are exploring possibilities to integrate AI RAG with platforms like Kotahi, aiming to assist researchers in synthesizing and writing scientific content. This initiative could dramatically streamline the research process, making it more efficient and effective.

Initially, however, we are integrating the AI RAG technologies with the AI Design Studio and Ketty. By enabling seamless integration of information retrieval and content generation, we are opening up new possibilities for our communities to create.

As we continue to develop and refine this approach, we remain committed to a factual, humble approach that prioritizes user needs and open-source innovation. As we learn more at Coko, I'll pass on what we find here.

© Adam Hyde, 2024, CC-BY-SA

Image public domain, created by MidJourney from prompts by Adam.
