Context Is the Next Frontier for Vibe Coding

As artificial intelligence becomes more integrated into software development, one challenge is starting to stand out: context management. According to Nikolay Savinov of Google DeepMind (https://goo.gle/3Bm7QzQ), the future of AI-assisted coding may hinge less on AI's ability to tackle harder problems and more on how well AI can understand and manage the context around the code it's working with.

Today's large language models can already assist with writing functions, fixing bugs, and generating boilerplate. However, they're still constrained by how much information they can "remember" at once, a limit known as the context window. Even with recent expansions, these windows are too narrow to capture the full state of a codebase or the nuanced relationships between its components. As a result, AI tools often "forget" what they just helped write, or fail to understand how new code fits into the broader architecture. This is a major limitation for vibe coding.

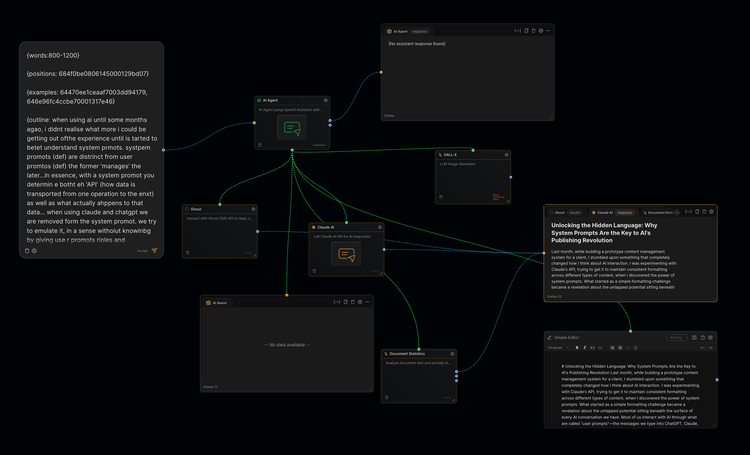

That said, it's not a showstopper. In practice, many of the current limitations of context aren't solved by the model itself, but by the agent—the wrapper or orchestration layer around the LLM. These agents "move" through the context—navigating files, reloading prompts, summarizing code chunks, and stitching things together to give the impression of a coherent memory. It's a bit of a patchwork, but it works well enough in many cases.
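To make the "stitching" idea concrete, here is a minimal sketch of how an agent might assemble a prompt: the file being edited goes in whole, and other files are reduced to short summaries until a size budget is reached. Everything here is an assumption made for illustration, including the names `summarize` and `build_context`, the character-based budget, and the crude "keep only definition lines" summary. Real agents typically use richer summaries and token counts.

```python
def summarize(source: str, max_lines: int = 3) -> str:
    """Crude stand-in for a summary: keep only the first few def/class lines."""
    heads = [
        line.strip()
        for line in source.splitlines()
        if line.lstrip().startswith(("def ", "class "))
    ]
    return "\n".join(heads[:max_lines])


def build_context(files: dict[str, str], focus: str, budget: int) -> str:
    """Stitch a prompt: the focus file in full, other files as summaries.

    `budget` is a character limit (a hypothetical proxy for tokens).
    The focus file is always included, even if it alone exceeds the budget.
    """
    separator = "\n\n"
    parts = [f"# {focus} (full)\n{files[focus]}"]
    used = len(parts[0])
    for name, source in files.items():
        if name == focus:
            continue
        piece = f"# {name} (summary)\n{summarize(source)}"
        cost = len(separator) + len(piece)
        if used + cost > budget:
            break  # assumed policy: stop at the first piece that does not fit
        parts.append(piece)
        used += cost
    return separator.join(parts)
```

Calling `build_context(files, "app.py", budget=110)` on a small project would return the full text of `app.py` followed by as many one-file summaries as fit in 110 characters. The model never sees the whole codebase, only a view the agent has chosen for it.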

If you're not a developer, you can still get a lot done by learning how to work with these boundaries. Often it just takes patience—repeating prompts, clarifying instructions, or nudging the AI back on track until the problem is solved. It's not seamless, but for prototyping and light development, it's already remarkably effective.

However, Savinov suggests a future where AI agents can efficiently manage large-scale context on their own, understanding not just individual files or small collections of files, but how all the parts of a system interrelate. In other words, an AI that can think more like a senior engineer who has an intimate understanding of a codebase and is able to weave it all together.

Savinov believes the first challenge to solve is the reliability of the context window. Most of us have seen, or heard of, an LLM returning unreliable results when asked to extract information from a document. Improving that reliability would enhance performance on its own, and combined with longer context windows it would represent an enormous lift for coding environments.

Savinov argues that improvements in reliability and context size will come soon and make an enormous impact, but he also says these alone won't be enough. Smarter algorithms and tooling (better agents) will also be needed to organize and prioritize relevant information so that the LLM isn't just sifting through noise.
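As a rough illustration of what "organize and prioritize" could mean in practice, the sketch below ranks code chunks by how many words they share with the task description and keeps the best ones that fit a size budget. This is not Savinov's method or any particular tool's algorithm. The function names, the word-overlap score, and the character budget are all assumptions chosen to keep the example small. Production systems more often use embeddings or code-aware indexing.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased identifier-like words in the text."""
    return set(re.findall(r"[a-z_]+", text.lower()))


def rank_chunks(query: str, chunks: list[str], budget: int) -> list[str]:
    """Pick the chunks most related to the query, within a character budget.

    Relevance here is plain word overlap (an assumed, deliberately naive score).
    Chunks that share no words with the query are treated as noise and dropped.
    """
    query_words = tokens(query)

    def score(chunk: str) -> int:
        return len(query_words & tokens(chunk))

    picked: list[str] = []
    used = 0
    # sorted() is stable, so equally scored chunks keep their original order
    for chunk in sorted(chunks, key=score, reverse=True):
        if score(chunk) == 0:
            break  # everything after this point is unrelated
        if used + len(chunk) <= budget:
            picked.append(chunk)
            used += len(chunk)
    return picked
```

Given the query "fix parse_config path handling", a chunk that defines `parse_config` would be kept while an unrelated UI helper would be left out, so the model spends its limited context on material that matters for the task.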

The implications go well beyond code. Similar techniques could make AI systems more effective across many domains—pulling insights from entire video archives, answering complex research questions across thousands of documents, or supporting long-running creative projects without losing track of earlier threads. It also raises intriguing creative possibilities: could LLMs begin surfacing connections between seemingly unrelated ideas? With improved context handling, the boundary between what's "relevant" and what's "inspiring" may start to blur—opening the door to unexpected associations that enrich the development process in ways human thinkers might not anticipate.

Of course, none of this comes for free. This kind of context-aware AI would demand not just smarter software, but better infrastructure. Larger memory capacities, faster storage, and improved inference runtimes will all play a role.

Still, the direction is clear: the next leap in vibe coding productivity won't come just from more capable models, but from ones that can remember and reason across time.
