Building in the Age of AI: Architecture for a Moving Target

The question that keeps coming up in conversations with developers and publishers is deceptively simple: "Should we build with AI?" But this question reveals a fundamental misunderstanding of the moment we're in. It's not about whether to build with AI—it's about how to build systems in an environment where the technological landscape shifts every six months.

The Problem We're Not Talking About

For decades, the hardest decision in software development was choosing between feature richness and maturity. Do you adopt the exciting new framework with innovative capabilities but questionable longevity? Or do you stick with the battle-tested solution that's been around for years? The gamble was always measured in decades. Will React still be here in ten years? Will Node? These were reasonable questions because the pace of change was predictable. You either chose conservatively and pragmatically, or your choice was a bet (I've made, won, and lost my share).

That world is gone.

When the Horizon Moves Every Six Months

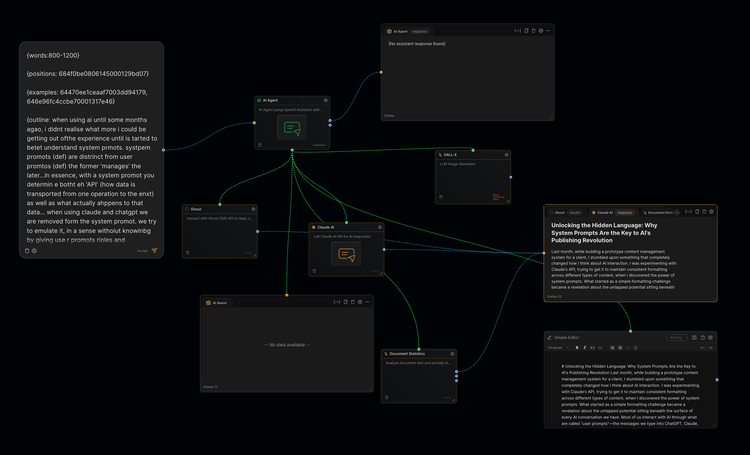

Consider RAG (Retrieval-Augmented Generation). The technique dates back to 2020, but just two years ago it rose to prominence as the promised solution to LLM memory and domain-specific knowledge problems. Within months, the limitations became apparent. The landscape exploded into dozens of RAG methodologies, hybrid approaches, and then a resurrection of knowledge graphs (see GraphRAG). All of this happened in roughly 24 months.

Or look at agents. Fourteen months ago, they were barely functional curiosities. Today, they're proliferating with multiple frameworks and protocols—MCP, A2A, and others. MCP was introduced in November 2024 and already feels dated. A2A launched in April 2025 and people talk about it like it's foundational knowledge everyone should already possess.

The horizon isn't measured in decades anymore. It's measured in months.

The Deeper Question

The real question isn't "when is the right time to build?" or "what should we build with?" These frame the problem in old terms. The actual challenge is architectural: how do we build systems that can survive in an environment of permanent, accelerating change?

We're no longer building with deterministic programming alone. We're building with semantic machines: systems that process meaning rather than just text. This is a fundamental shift in the nature of computation itself.

"Any application that can be written in JavaScript, will eventually be written in JavaScript"

- Jeff Atwood

There's a version of Atwood's Law for AI emerging: any system that can be enhanced with semantic processing will be enhanced with semantic processing. Not because AI can do everything (it can't), and not because deterministic methods are obsolete (they're not). But because the combination of deterministic logic and semantic understanding produces capabilities that neither can achieve alone.

Yes, semantic machines have limitations and failure modes. Yes, there's still a lot to be solved. But when it comes to building with AI, the critical difference isn't in what these machines can or can't do now. It's in how fast they evolve. I can't think of a system where, given enough time, semantic machines won't eventually become part of the underlying processing core. The question isn't whether your system will integrate AI. The question is how your architecture changes when it does, and which architectural choices you make now so that the change is cheap when the time comes.

The Answer Isn't Waiting

The instinct is to wait for things to settle down. To let the dust clear before making architectural decisions. This is a mistake. The pace of change is not a temporary condition—it's a defining characteristic of AI development. We're not in a period of disruption that will eventually stabilize. We're in a new permanent state where disruption is the baseline.

Waiting for stability is like living in Wellington and waiting for the wind to stop before building a windmill (NZ joke).

Architecture for Permanent Change

The answer lies in how we architect systems, not in which technologies we choose. We need to build systems with adaptability embedded in their DNA. This means:

Systems with swappable cores. The fundamental processing logic—whether it's a RAG pipeline, an agent framework, or a semantic markup system—must be designed to be replaced in hours or days, not months. Your architecture should treat these components as interchangeable modules, not as load-bearing walls.

Abstraction at the interface, not the implementation. Don't abstract away the AI—abstract away the commitment to any particular AI approach. Your system should care about "semantic search" or "content generation" as capabilities, not about "this specific RAG implementation" or "that particular model."

Finite lifespans for technical choices. Plan from day one for replacement. Every technical decision should come with an expiration date and a migration path. If you can't imagine replacing a component within months, you've integrated it too deeply.

Modular by default. The systems we built in the deterministic era could be monolithic because the foundations were stable. The systems we build in the AI era must be modular because we know the foundations will shift.
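To make the first three principles concrete, here is a minimal sketch in Python. Everything in it is hypothetical and chosen for illustration: the `SemanticSearch` capability, the toy `KeywordSearch` implementation (plain word overlap, standing in for whatever RAG pipeline or model you actually use), and the `CapabilityRegistry` that records a review date next to each choice. It is one way to express the idea, not a prescribed design.

```python
from dataclasses import dataclass
from datetime import date
from typing import Protocol


class SemanticSearch(Protocol):
    """The capability the rest of the system depends on (hypothetical name)."""

    def search(self, query: str, limit: int = 3) -> list[str]: ...


class KeywordSearch:
    """Toy stand-in implementation: ranks documents by shared words."""

    def __init__(self, documents: list[str]) -> None:
        self._documents = documents

    def search(self, query: str, limit: int = 3) -> list[str]:
        terms = set(query.lower().split())
        scored = [(len(terms & set(doc.lower().split())), doc)
                  for doc in self._documents]
        # Keep only documents that share at least one word, best match first.
        ranked = sorted((pair for pair in scored if pair[0] > 0),
                        key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in ranked[:limit]]


@dataclass(frozen=True)
class Registration:
    implementation: SemanticSearch
    review_by: date  # the "expiration date" attached to this technical choice


class CapabilityRegistry:
    """Maps capability names to whichever implementation is current."""

    def __init__(self) -> None:
        self._entries: dict[str, Registration] = {}

    def register(self, capability: str, implementation: SemanticSearch,
                 review_by: date) -> None:
        # Registering again under the same name swaps the core in place.
        self._entries[capability] = Registration(implementation, review_by)

    def get(self, capability: str) -> SemanticSearch:
        return self._entries[capability].implementation

    def overdue(self, today: date) -> list[str]:
        """Names of capabilities whose review date has passed."""
        return sorted(name for name, entry in self._entries.items()
                      if entry.review_by < today)


registry = CapabilityRegistry()
registry.register(
    "semantic_search",
    KeywordSearch(["knowledge graphs and retrieval",
                   "retrieval augmented generation"]),
    review_by=date(2026, 1, 1),
)
results = registry.get("semantic_search").search("retrieval generation")
```

Callers only ever ask the registry for "semantic_search". Replacing the toy implementation with a real one means writing another class with the same `search` method and registering it under the same name, and `overdue` gives you a cheap way to ask which choices are past their planned lifespan.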

What This Means in Practice

Consider a content processing pipeline. Instead of building a monolithic system that does semantic enrichment, metadata extraction, and format conversion in one tightly-coupled flow, build it as separate services. Each service communicates through well-defined interfaces. When a better approach to semantic analysis emerges (and it will), you swap out that one component without touching the rest.
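As a rough illustration, the sketch below models each stage as a plain function with the same signature. In a real deployment these would be separate services behind network interfaces; in-process functions are used here only to keep the example short. All names (`extract_metadata`, `enrich_semantics_v1`, `enrich_semantics_v2`, `convert_format`) and the toy "enrichment" logic are assumptions made for the example.

```python
from typing import Callable

Document = dict[str, object]
Stage = Callable[[Document], Document]  # the one interface every stage honors


def extract_metadata(doc: Document) -> Document:
    return {**doc, "word_count": len(str(doc["text"]).split())}


def enrich_semantics_v1(doc: Document) -> Document:
    # Placeholder "semantic" step: treat capitalized words as tags.
    words = str(doc["text"]).split()
    tags = sorted({w.strip(".,") for w in words if w[:1].isupper()})
    return {**doc, "tags": tags}


def enrich_semantics_v2(doc: Document) -> Document:
    # A later approach with the same interface: lowercase words of 5+ letters.
    words = (w.strip(".,").lower() for w in str(doc["text"]).split())
    return {**doc, "tags": sorted({w for w in words if len(w) >= 5})}


def convert_format(doc: Document) -> Document:
    return {**doc, "html": f"<p>{doc['text']}</p>"}


def run_pipeline(doc: Document, stages: list[Stage]) -> Document:
    """Pass the document through each stage in order."""
    for stage in stages:
        doc = stage(doc)
    return doc


source = {"text": "Wellington is windy."}
before = run_pipeline(source, [extract_metadata, enrich_semantics_v1, convert_format])
# Swapping the semantic step touches one list entry and nothing else.
after = run_pipeline(source, [extract_metadata, enrich_semantics_v2, convert_format])
```

The design choice that matters is the shared `Stage` signature: because every stage takes a document and returns a new one, the metadata and format-conversion steps never learn that the semantic step changed.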

This isn't about future-proofing. You can't future-proof against a future you can't predict. It's about making change cheap. It's about treating architectural flexibility not as a nice-to-have, but as the primary design constraint.

The New Constraint

The old trade-off was features versus maturity. That trade-off has collapsed. Now there's only one constraint that matters: how quickly can your system adapt?

This doesn't mean building less capable systems. It means building systems where capability isn't welded to implementation. You can build something that does remarkable things with today's AI and can swap in tomorrow's approaches—but only if you design for change from the beginning.

The systems that will survive aren't the ones that do the most impressive things today. They're the ones that can do impressive things with whatever comes next.

Embracing the Pace

The pace of AI development won't slow down. The landscape won't stabilize. The best practice you implement today will be superseded by something better in months. This is not a problem to be solved—it's a reality to be designed for.

We're not waiting for the technology to mature. We're learning to build systems that can evolve as fast as the technology does.

The question isn't whether to build with AI. It's whether we're brave enough to build systems that acknowledge how quickly AI will change, and humble enough to design for the obsolescence of the choices we made six months ago.
