AI and the Intent Gap: Exploring Misunderstandings

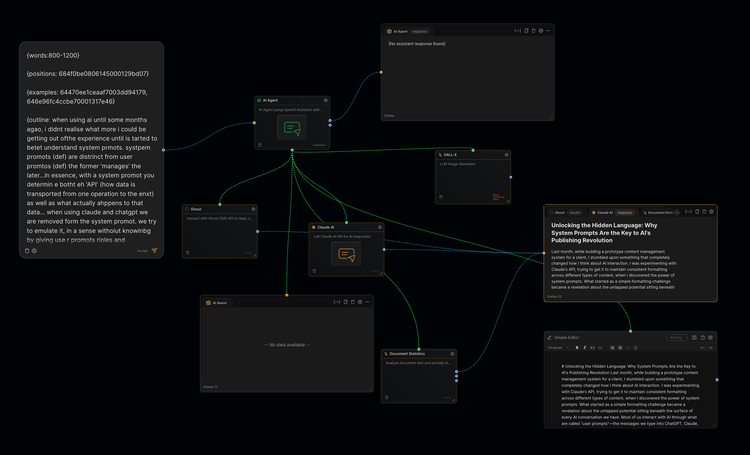

This is the first article I'm publishing that was written with a new AI tool I am developing.

Consider the last time you interacted with a chatbot or AI of some type. Perhaps you asked a recommendation site for "a good movie to watch tonight" and received suggestions for documentaries about industrial farming when you were hoping for a romantic comedy. Or maybe you prompted an AI with "What should I make for dinner?" when you were tired and just wanted a simple suggestion, and the chatbot asked about your dietary preferences instead. These moments of disconnect aren't merely technological hiccups. They're windows into the fundamental challenge of intent interpretation.

The intent gap exists in the space between human communication's rich, contextual nature and AI's current reliance on explicit inputs (text or voice), pattern matching, and statistical inference. When humans communicate, we embed layers of meaning: emotional state, cultural context, personal history, immediate circumstances, and unspoken assumptions. We expect our listeners to fill in gaps, read between lines, and understand not just our words but our underlying needs and desires.

Where AI Falls Short

The limitations become particularly apparent in several key areas:

Emotional Intelligence: AI systems often miss the emotional undertones that fundamentally alter meaning. A frustrated "Can you help me?" requires a different response than an excited "Can you help me?" Yet most AI systems focus on the literal request rather than the emotional context that shapes appropriate responses.

Cultural Context: Training data often reflects dominant cultural perspectives, leaving AI systems poorly equipped to understand intentions shaped by different cultural frameworks, communication styles, or social norms.

Temporal Context: Human intentions exist within the flow of ongoing relationships and experiences. What someone needs today might be entirely different from what they needed yesterday, even if they use identical words. AI systems typically lack this temporal awareness and relationship memory.

Implicit Knowledge: Humans communicate with vast amounts of shared, unspoken knowledge. When someone says they need to "get ready for the meeting," they assume the listener understands what "getting ready" entails in their specific context. AI systems often lack this implicit knowledge base.

The Intent Gap in Vibe Coding

When an AI receives a prompt like "make a login form", it will typically generate a technically correct login form, complete with proper HTML structure and basic validation. But it misses everything else: the architectural patterns that matter to your team, the security requirements embedded in your company's culture, the subtle contextual clues that this needs to be built quick and dirty for a presentation tomorrow.

This intent gap in vibe coding has become an important challenge in the effort to improve AI coding agents. Understanding this gap (how it forms, why it persists, and what it might take to close it) reveals a great deal about the current limitations and future potential of AI in software development.

The Windsurf Gambit

The importance of this intent gap to the AI industry is visibly playing out in a recent corporate bidding war. Windsurf is an Integrated Development Environment (IDE) that developers use to write code with the assistance of AI. When OpenAI made a recent play for Windsurf, they weren't just buying a code editor; they were positioning themselves to get data to help close this gap.

Think about what an IDE actually observes: every prompt a developer writes, every line of AI-generated code, every modification they make, every time they pause to think, every debugging session that reveals what went wrong. It's a window into the gap between what developers ask for and what they actually need.

In the end, OpenAI did not acquire Windsurf. In a complex clash of interests and corporate competitiveness, OpenAI’s deal reportedly collapsed due to restrictions imposed by Microsoft, its primary backer. Google stepped in and secured Windsurf’s top executives and research team through a licensing agreement and strategic hires, while Cognition AI acquired the remainder of the company—its product, IP, brand, and most of the team.

However, what makes this corporate battle for Windsurf interesting is its potential for learning about how code is made. Every interaction becomes a lesson in understanding intent. When a developer types "Create a login form" and then immediately starts modifying the AI's output, the system can observe exactly what was missing. Did they add error handling? Adjust the styling to match team conventions? Completely restructure the data flow?

Each modification tells a story. High acceptance rates suggest the AI correctly interpreted intent. Consistent patterns of modification reveal systematic gaps in understanding. Complete rewrites indicate fundamental misunderstanding of requirements. Context switching—when developers stop to gather more information—shows that the original prompt was incomplete.
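To make these signals concrete, here is a minimal sketch of how a tool might label each interaction by comparing the AI's suggestion with the code the developer finally kept. It is an illustration only, not how Windsurf or any other product works: the function names `classify_edit` and `summarize` are hypothetical, the two thresholds are arbitrary assumptions, and plain text similarity from Python's standard `difflib` module stands in for whatever richer signal a real IDE would use.

```python
from collections import Counter
from difflib import SequenceMatcher

# Hypothetical cut-offs, chosen only for illustration.
ACCEPT_THRESHOLD = 0.95   # nearly identical: suggestion kept as-is
REWRITE_THRESHOLD = 0.40  # little in common: suggestion thrown away


def classify_edit(ai_output: str, final_code: str) -> str:
    """Label how much the developer changed the AI's suggestion."""
    # ratio() is 1.0 for identical text and 0.0 for nothing in common.
    similarity = SequenceMatcher(None, ai_output, final_code).ratio()
    if similarity >= ACCEPT_THRESHOLD:
        return "accepted"
    if similarity <= REWRITE_THRESHOLD:
        return "rewritten"
    return "modified"


def summarize(pairs: list[tuple[str, str]]) -> Counter:
    """Count the labels across many (ai_output, final_code) pairs."""
    return Counter(classify_edit(ai, final) for ai, final in pairs)
```

Under these assumptions, a high share of "accepted" labels would suggest the prompts were interpreted well, while a steady stream of "modified" labels on the same kind of prompt would point to a systematic gap. Text similarity alone cannot say what was missing, only that something was.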

This creates a valuable opportunity to close at least some of the intent gap through direct observation of developer behavior. It doesn't capture everything in the gap, but it could prove useful for making these systems more productive.

Any organisation whose tools capture this information can potentially solve part of this problem through massive data collection, where every developer interaction becomes training data. It will have access to real-time feedback that immediately correlates intent with satisfaction, contextual learning that understands how the same (or a similar) prompt should be interpreted differently across teams and projects, and iterative improvement based on actual developer behavior.

Coda

However, there's another angle worth pondering: Are developers inadvertently training their own replacements by using these AI tools? Your response to this question reveals where you stand on the broader AI spectrum.

Do you believe we're simply teaching AI systems to replace us through our usage patterns? Or do you see a future where demand for code creation will be so vast that we'll swim in better code, requiring more human oversight and creativity than ever?

This question about contributing to our own obsolescence isn't unique to coding—it's a recurring theme across AI and automation. The very act of improving these tools through our interactions might be the mechanism by which they eventually surpass our need for direct involvement.
